Your model has 500 million parameters. Your GPU has 16GB of memory. The model needs 40GB. Before GPipe, you were stuck. This paper introduces pipeline parallelism: split the model across devices and keep them all busy.

Why Sutskever Included This

Model scale drives capability. GPT-3 has 175 billion parameters. Training it requires distributing computation across many devices. GPipe introduced the pipeline parallelism approach that made such models trainable. The techniques here underpin modern large language model infrastructure.

The Memory Problem

Training consumes more memory than inference. You store activations from the forward pass for use in the backward pass. For large models, these activations alone exceed GPU memory.

Data parallelism (replicating the full model on each device) doesn't help. The model still needs to fit on each device. You need a way to split the model itself.

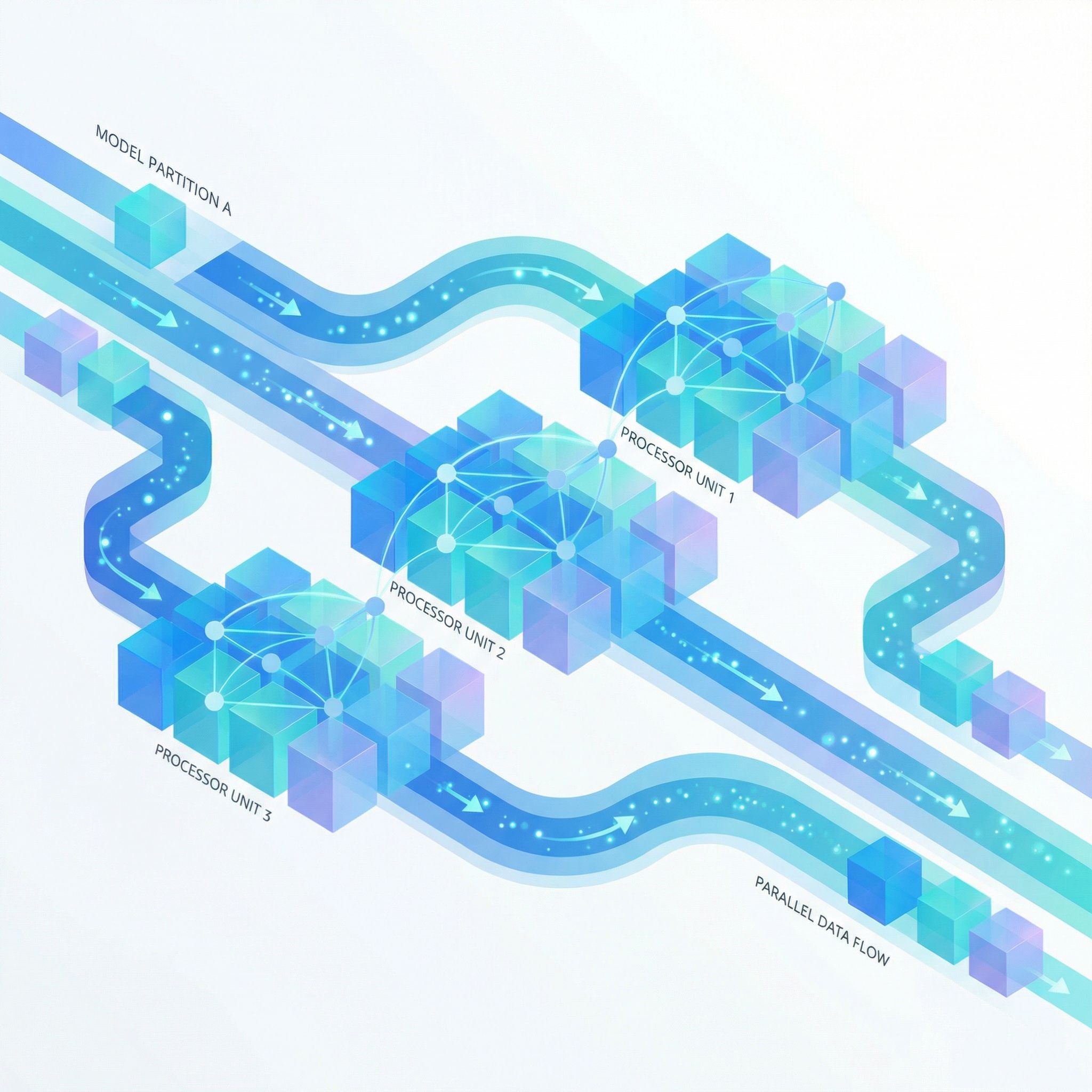

Pipeline Parallelism

Divide the model into K sequential segments. Assign each segment to a different device. Data flows through: Device 1 processes layers 1-10, sends activations to Device 2, which processes layers 11-20, and so on.
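As a minimal sketch of that split, the snippet below assigns contiguous layer ranges to devices. The helper name `partition_layers` is hypothetical, and it assumes every layer costs the same, so it splits evenly by layer count; a real partitioner would more likely balance by estimated compute or memory cost.

```python
def partition_layers(num_layers, k):
    """Split layers 1..num_layers into k contiguous (first, last) ranges.

    Assumption: every layer costs the same, so an even split by count is
    treated as balanced. Any remainder goes to the earliest devices.
    """
    if not 1 <= k <= num_layers:
        raise ValueError("need 1 <= k <= num_layers")
    base, extra = divmod(num_layers, k)
    ranges = []
    first = 1
    for device in range(k):
        size = base + (1 if device < extra else 0)
        ranges.append((first, first + size - 1))
        first += size
    return ranges


for device, (first, last) in enumerate(partition_layers(40, 4), start=1):
    print(f"Device {device}: layers {first}-{last}")
```

With 40 layers and 4 devices this reproduces the layers 1-10, 11-20 pattern described above.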

The problem: with one batch, most devices sit idle. Device 2 waits for Device 1 to finish. Device 3 waits for Device 2. During the backward pass, the same happens in reverse: Device 4 runs its backward step first, and Device 1 runs last. Each device is busy for only 2 of 8 time slots, so 75% of the time is wasted.

Single batch across 4 devices:

Device 1: [WORK][-idle-][-idle-][-idle-][-idle-][-idle-][-idle-][BACK]

Device 2: [-idle-][WORK][-idle-][-idle-][-idle-][-idle-][BACK][-idle-]

Device 3: [-idle-][-idle-][WORK][-idle-][-idle-][BACK][-idle-][-idle-]

Device 4: [-idle-][-idle-][-idle-][WORK][BACK][-idle-][-idle-][-idle-]

Micro-Batching

Split each mini-batch into M smaller micro-batches. Feed them through the pipeline in sequence. While Device 2 processes micro-batch 1, Device 1 processes micro-batch 2.

The pipeline fills up. Devices work in parallel on different micro-batches. The bubble (idle time at start and end) shrinks relative to useful work.
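Splitting the mini-batch does not change the result of the training step, because the gradients from the micro-batches are accumulated and applied together. The sketch below checks this on a toy one-parameter model; the model, the data, and the helper names are all assumptions made for illustration, not anything from the paper.

```python
def split_into_micro_batches(batch, m):
    """Split a list of examples into m equal-sized micro-batches."""
    if m < 1 or len(batch) % m != 0:
        raise ValueError("batch size must be divisible by m")
    size = len(batch) // m
    return [batch[i * size:(i + 1) * size] for i in range(m)]


def grad_sum(w, examples):
    """Sum over examples of d/dw (w * x - y)^2 for a toy linear model."""
    return sum(2.0 * (w * x - y) * x for x, y in examples)


def accumulated_gradient(w, batch, m):
    """Accumulate gradients micro-batch by micro-batch, then average once."""
    total = 0.0
    for micro_batch in split_into_micro_batches(batch, m):
        total += grad_sum(w, micro_batch)
    return total / len(batch)


# Toy data: 8 (x, y) pairs, chosen only for illustration.
batch = [(float(i), 2.0 * i + 1.0) for i in range(8)]
w = 0.5
full = grad_sum(w, batch) / len(batch)
accum = accumulated_gradient(w, batch, m=4)
print(full, accum)
```

The two printed values agree, which is why micro-batching can be treated as a scheduling change rather than a change to the optimization itself.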

Bubble fraction = (K - 1) / (K - 1 + M)

With 4 devices and 16 micro-batches: bubble = 3/19 ≈ 16%. The pipeline runs at 84% efficiency.
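The arithmetic can be checked with a few lines of Python. The function name `bubble_fraction` is just a label for the formula above, and it assumes every micro-batch step takes the same time on every device.

```python
from fractions import Fraction


def bubble_fraction(k, m):
    """Idle fraction (K - 1) / (K - 1 + M) for K devices and M micro-batches.

    Assumption: every step takes the same time on every device.
    """
    if k < 1 or m < 1:
        raise ValueError("k and m must be positive")
    return Fraction(k - 1, k - 1 + m)


bubble = bubble_fraction(4, 16)
print(bubble, f"{float(bubble):.1%}")
print(bubble_fraction(4, 1))  # the single-batch case, M = 1
```

Setting M = 1 recovers the single-batch case, where 3 of every 4 time slots are idle.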

F-then-B Schedule

GPipe uses a specific execution order. All M micro-batches complete their forward passes through the entire pipeline. Then all M micro-batches complete their backward passes in reverse order.

This simplifies implementation. Each device finishes all forward work before switching to backward work. The alternative (interleaving forward and backward for each micro-batch) requires more complex scheduling.
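To see the schedule, the sketch below builds a per-device timeline for the forward-then-backward order. It is a simplification that assumes every forward and backward step takes exactly one time slot; in practice backward steps take longer. The function name and the `F0`/`B0` labels are made up for illustration.

```python
def f_then_b_schedule(k, m):
    """Return one row of slot labels per device for a forward-then-backward run.

    Assumption: each forward or backward step of one micro-batch on one
    device takes exactly one time slot. "--" marks an idle slot.
    """
    total = 2 * (m + k - 1)
    rows = [["--"] * total for _ in range(k)]
    backward_start = m + k - 1  # first slot after all forward work is done
    for d in range(k):
        for mb in range(m):
            # Forward: micro-batch mb reaches device d after d hops.
            rows[d][d + mb] = f"F{mb}"
            # Backward: starts at the last device, micro-batches reversed.
            slot = backward_start + (k - 1 - d) + (m - 1 - mb)
            rows[d][slot] = f"B{mb}"
    return rows


for device, row in enumerate(f_then_b_schedule(4, 4), start=1):
    print(f"Device {device}: " + " ".join(f"{label:>3}" for label in row))
```

Counting the `--` entries for 4 devices and 16 micro-batches gives an idle share of 3/19, matching the bubble formula.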

Re-materialization

Storing activations for M micro-batches across K partitions eats memory. GPipe trades computation for memory: store only the activations at partition boundaries. During the backward pass, recompute the intermediate activations within each partition.
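The idea can be sketched on a toy chain of scalar layers. Everything here is an assumption for illustration: the `tanh(w * x)` layers, the helper names, and the fixed `partition_size`. The forward pass keeps only the input to each partition, and the backward pass recomputes the activations inside a partition just before it needs them.

```python
import math


def layer_forward(w, x):
    return math.tanh(w * x)


def layer_backward(w, x, grad_out):
    # d/dx tanh(w * x) = w * (1 - tanh(w * x)^2)
    y = math.tanh(w * x)
    return grad_out * w * (1.0 - y * y)


def forward_keep_boundaries(weights, x, partition_size):
    """Run the forward pass, storing only the input to each partition."""
    boundaries = []
    for i, w in enumerate(weights):
        if i % partition_size == 0:
            boundaries.append(x)
        x = layer_forward(w, x)
    return x, boundaries


def backward_with_recompute(weights, boundaries, partition_size, grad_out=1.0):
    """Backward pass that recomputes each partition's inner activations."""
    grad = grad_out
    for p in reversed(range(len(boundaries))):
        start = p * partition_size
        part = weights[start:start + partition_size]
        inputs, x = [], boundaries[p]
        for w in part:  # recompute this partition's forward pass
            inputs.append(x)
            x = layer_forward(w, x)
        for w, x_in in zip(reversed(part), reversed(inputs)):
            grad = layer_backward(w, x_in, grad)
    return grad


def backward_full_storage(weights, x, grad_out=1.0):
    """Reference backward pass that stores every layer input."""
    inputs = []
    for w in weights:
        inputs.append(x)
        x = layer_forward(w, x)
    grad = grad_out
    for w, x_in in zip(reversed(weights), reversed(inputs)):
        grad = layer_backward(w, x_in, grad)
    return grad


weights = [0.5, -1.2, 0.8, 1.5, -0.3, 0.9, 1.1, -0.7]
out, boundaries = forward_keep_boundaries(weights, 0.4, partition_size=4)
print(len(boundaries), "stored activations instead of", len(weights))
print(backward_with_recompute(weights, boundaries, 4))
print(backward_full_storage(weights, 0.4))
```

Both backward passes produce the same gradient; the recomputing version pays for it with one extra forward pass through each partition.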

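This technique (also called gradient checkpointing) existed before GPipe but is essential to making pipeline parallelism practical. With mini-batch size N, L layers, K partitions, and M micro-batches, the paper gives the peak activation memory as O(N + (L/K) × (N/M)), compared with O(N × L) without re-materialization and partitioning.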
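For a rough sense of scale, the GPipe paper states the peak activation memory as O(N + (L/K) × (N/M)) with re-materialization, against O(N × L) without it, where N is the mini-batch size, L the number of layers, K the number of partitions, and M the number of micro-batches. The sketch below plugs numbers into those expressions. Treating an asymptotic bound as an exact count is a simplification, and the function names and example sizes are assumptions.

```python
def activation_units_baseline(n, l):
    """O(N * L): every layer's activations kept for the whole mini-batch."""
    return n * l


def activation_units_rematerialized(n, l, k, m):
    """O(N + (L/K) * (N/M)): boundary activations for the mini-batch, plus
    one partition's activations for one micro-batch during recomputation.

    Constant factors are ignored, so this is an order-of-magnitude guide.
    """
    return n + (l / k) * (n / m)


n, l, k, m = 128, 40, 4, 16  # example sizes, chosen only for illustration
print(activation_units_baseline(n, l))
print(activation_units_rematerialized(n, l, k, m))
```

The units are abstract (one unit is one example's activations at one layer), so only the ratio between the two numbers is meaningful.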

Practical Tradeoffs

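More micro-batches reduce bubble overhead, but for a fixed mini-batch each micro-batch gets smaller, which can leave each device underutilized. Gradients are accumulated across micro-batches, so gradient memory does not grow with M. The paper reports that bubble overhead is negligible when M ≥ 4K.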
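One way to reason about the choice of M is to invert the bubble formula: given K devices and a target bubble fraction, find the smallest M that meets it. The helper `min_micro_batches` below is a hypothetical name, and it relies on the same equal-step-time assumption as the formula itself.

```python
import math
from fractions import Fraction


def min_micro_batches(k, target):
    """Smallest M with (K - 1) / (K - 1 + M) <= target.

    Rearranging gives M >= (K - 1) * (1 - target) / target.
    Assumption: equal step times, as in the bubble formula.
    """
    target = Fraction(target)
    if k < 1 or not 0 < target < 1:
        raise ValueError("need k >= 1 and 0 < target < 1")
    return max(1, math.ceil((k - 1) * (1 - target) / target))


for k in (2, 4, 8, 16):
    m = min_micro_batches(k, Fraction(1, 5))
    print(f"K={k}: M >= {m} for a 20% bubble (4K = {4 * k})")
```

Under this formula, M = 4K always keeps the bubble below 20%, since (K - 1) / (5K - 1) is less than 1/5 for every K.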

More partitions allow larger models but increase bubble fraction. You want enough partitions to fit the model, not more.

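Re-materialization re-runs each partition's forward pass during the backward pass. Assuming a backward pass costs about twice a forward pass, that extra forward pass accounts for ~25% of the step's computation. It is worth it when memory is the constraint.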

What GPipe Enabled

The paper trained AmoebaNet-B with 557 million parameters on ImageNet, reaching 84.4% top-1 accuracy, state of the art in 2018. More significantly, it demonstrated that pipeline parallelism scales to models that can't fit on a single device.

Modern systems combine pipeline parallelism with data parallelism and tensor parallelism. GPipe established one leg of this three-legged approach to training massive models.

Limitations

Pipeline parallelism works for models with sequential structure. Highly branched architectures don't partition cleanly. The technique also introduces latency: results take time to flow through the pipeline.

Synchronous training (which GPipe uses) blocks until all devices complete. Asynchronous variants exist but introduce optimization challenges.

Connection to Modern Training

Systems for training today's largest language models use pipeline parallelism alongside other techniques. Megatron-LM, DeepSpeed, and other frameworks build on GPipe's core ideas.

Paper #22 in this series (Scaling Laws) explores why we want such large models. GPipe explains how to train them.

Further Reading

More in This Series

- Paper #1: Why Coffee Mixes But Never Unmixes

- Paper #2: The Unreasonable Effectiveness of RNNs

- Paper #3: Understanding LSTM Networks

- Paper #4: Recurrent Neural Network Regularization

- Paper #5: Keeping Neural Networks Simple

- Paper #6: Pointer Networks

- Paper #7: AlexNet

- Paper #8: Order Matters - Seq2Seq for Sets

Part of a series on Ilya Sutskever's recommended 30 papers, connecting each to practical AI development.