In 2012, Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton entered a convolutional neural network in the ImageNet competition. It cut the error rate nearly in half. Within two years, almost every leading computer vision paper used neural networks. Before AlexNet, almost none did.

Why Sutskever Included This

Sutskever is a co-author. This paper launched his career and demonstrated that deep learning could solve real problems at scale. The techniques developed here—GPU training, ReLU activations, dropout—became standard practice. AlexNet has been cited over 170,000 times.

The ImageNet Challenge

ImageNet contains over a million labeled images across 1,000 categories. The annual Large Scale Visual Recognition Challenge (ILSVRC) measured progress in image classification. Before 2012, the best systems used hand-designed features (SIFT, HOG) fed into shallow classifiers like SVMs.

These systems improved incrementally. The 2010 winner achieved 28.2% top-5 error (meaning the correct label wasn't in the system's top 5 guesses 28.2% of the time). The 2011 winner reached 25.8%. Progress was steady but slow.

AlexNet achieved 15.3% top-5 error. The gap between first and second place was larger than all previous years of progress combined.

The Architecture

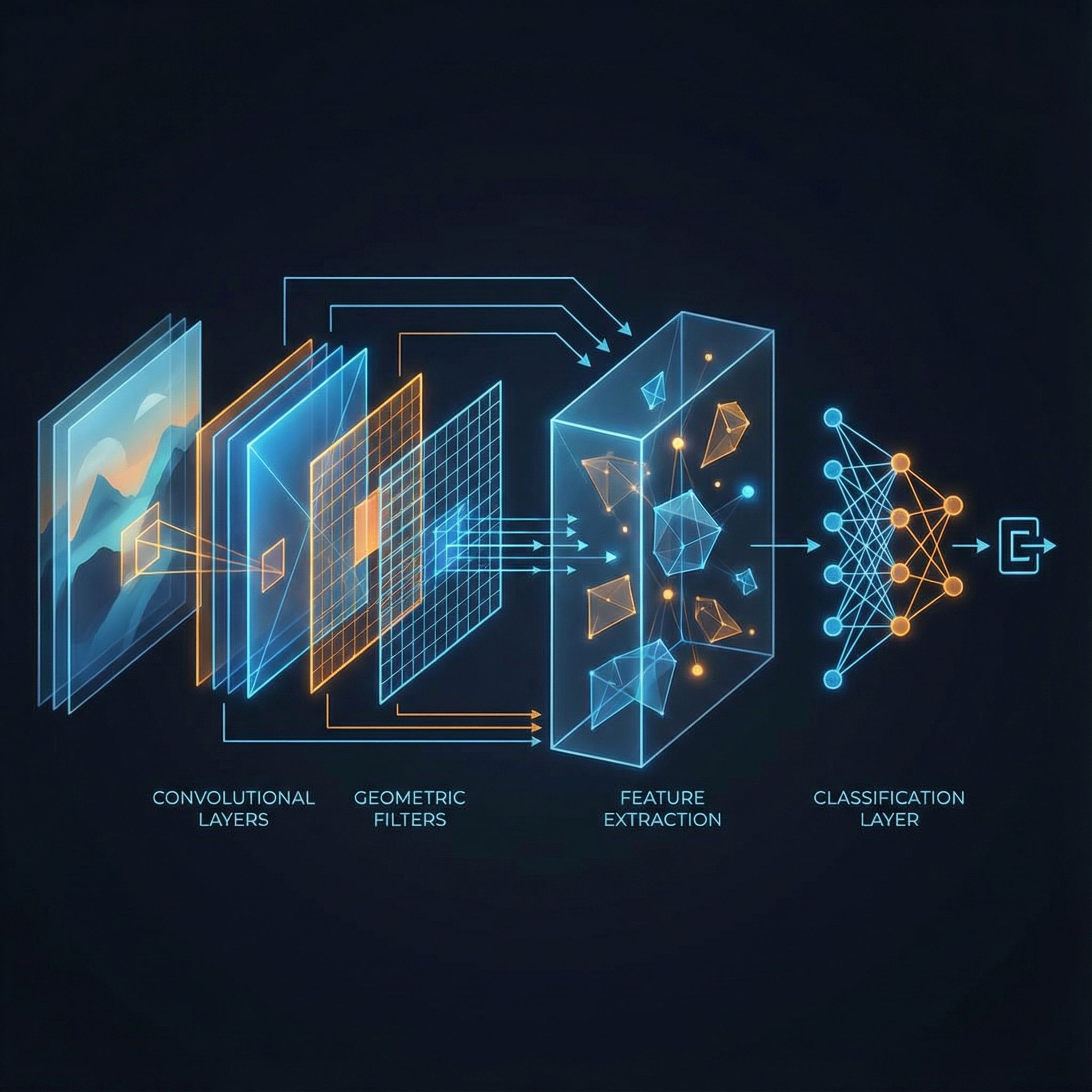

AlexNet had eight layers: five convolutional and three fully connected. This sounds modest now, but it was deep for 2012. The network had 60 million parameters and 650,000 neurons.

The convolutional layers learned hierarchical features automatically. Early layers detected edges and color gradients. Middle layers combined these into textures and patterns. Later layers recognized object parts and eventually whole objects. No human specified what features to look for.

Layer 1: 96 filters, 11x11, stride 4 → edges, colors

Layer 2: 256 filters, 5x5 → textures

Layer 3-5: 384, 384, 256 filters, 3x3 → parts, objects

FC layers: 4096, 4096, 1000 → classification

ReLU: Faster Training

Previous networks used sigmoid or tanh activations. These functions saturate: for large inputs, the gradient approaches zero. Deep networks trained slowly because gradients vanished as they propagated backward through saturating layers.

AlexNet used ReLU (Rectified Linear Unit): f(x) = max(0, x). For positive inputs, the gradient is 1. No saturation, no vanishing gradients. The paper showed that ReLU networks trained several times faster than tanh networks on ImageNet.

ReLU had been proposed before, but AlexNet demonstrated its effectiveness at scale. It became the default activation for deep networks.

GPU Training

Training AlexNet on CPUs would have taken weeks. Krizhevsky implemented the network on two NVIDIA GTX 580 GPUs, each with 3GB of memory. The model was split across GPUs, with some layers communicating between them.

Training took about a week. This made experimentation feasible. Without GPUs, the architecture couldn't have been developed through iterative refinement.

The paper established GPUs as essential for deep learning research. Every subsequent advance in computer vision, NLP, and generative models relied on GPU acceleration.

Dropout: Regularization That Works

With 60 million parameters and only 1.2 million training images, overfitting was inevitable. The paper used dropout in the fully connected layers: during training, each neuron was randomly set to zero with probability 0.5.

Dropout forces the network to learn redundant representations. No single neuron can memorize training examples because it might be absent during any given forward pass. This improved generalization significantly.

Paper #4 in this series (RNN Regularization) built on dropout for recurrent networks. The technique originated in Hinton's group around the same time as AlexNet.

Data Augmentation

The paper artificially expanded the training set through transformations. Random crops from 256x256 images produced 224x224 inputs. Horizontal flips doubled the effective dataset size. RGB color jittering added variation in lighting.

At test time, the network averaged predictions across multiple crops and flips. This ensemble approach improved accuracy without additional training.

Data augmentation became standard practice. Modern systems use more sophisticated augmentations, but the principle remains: more varied training data improves generalization.

Local Response Normalization

AlexNet included a form of local normalization inspired by neuroscience: neurons that fire strongly suppress their neighbors. This helped slightly but was later abandoned. Batch normalization (introduced in 2015) proved more effective and became the standard.

Not every innovation in AlexNet survived. The lasting contributions were the overall approach (deep CNNs trained end-to-end on GPUs) and specific techniques like ReLU and dropout rather than every architectural detail.

The Competition

AlexNet won ILSVRC 2012 decisively. Second place used hand-engineered features and achieved 26.2% top-5 error. The neural network approach wasn't just better; it operated on different principles entirely.

The victory forced the computer vision community to reckon with a paradigm shift. Researchers who had spent careers developing feature engineering techniques suddenly needed to learn deep learning. Conferences that had rarely accepted neural network papers began publishing them extensively.

After AlexNet

Krizhevsky, Sutskever, and Hinton founded DNNresearch and sold it to Google in 2013. Sutskever later co-founded OpenAI. Hinton joined Google Brain. All three received the Turing Award in 2018 for their work on deep learning.

Subsequent ImageNet winners went deeper: VGGNet (19 layers, 2014), GoogLeNet (22 layers, 2014), ResNet (152 layers, 2015). Each reduced error rates further, but the conceptual breakthrough was AlexNet's. The others were refinements.

Why This Matters Today

AlexNet established that deep learning could outperform decades of specialized research in a well-studied domain. Computer vision experts had optimized hand-crafted features for years. A general-purpose learning algorithm, given enough data and compute, surpassed all of it.

This pattern repeated in speech recognition, machine translation, game playing, and eventually language modeling. The lesson: end-to-end learning with sufficient scale often beats domain-specific engineering. AlexNet was the first large-scale proof.

Further Reading

More in This Series

Part of a series on Ilya Sutskever's recommended 30 papers, connecting each to practical AI development.