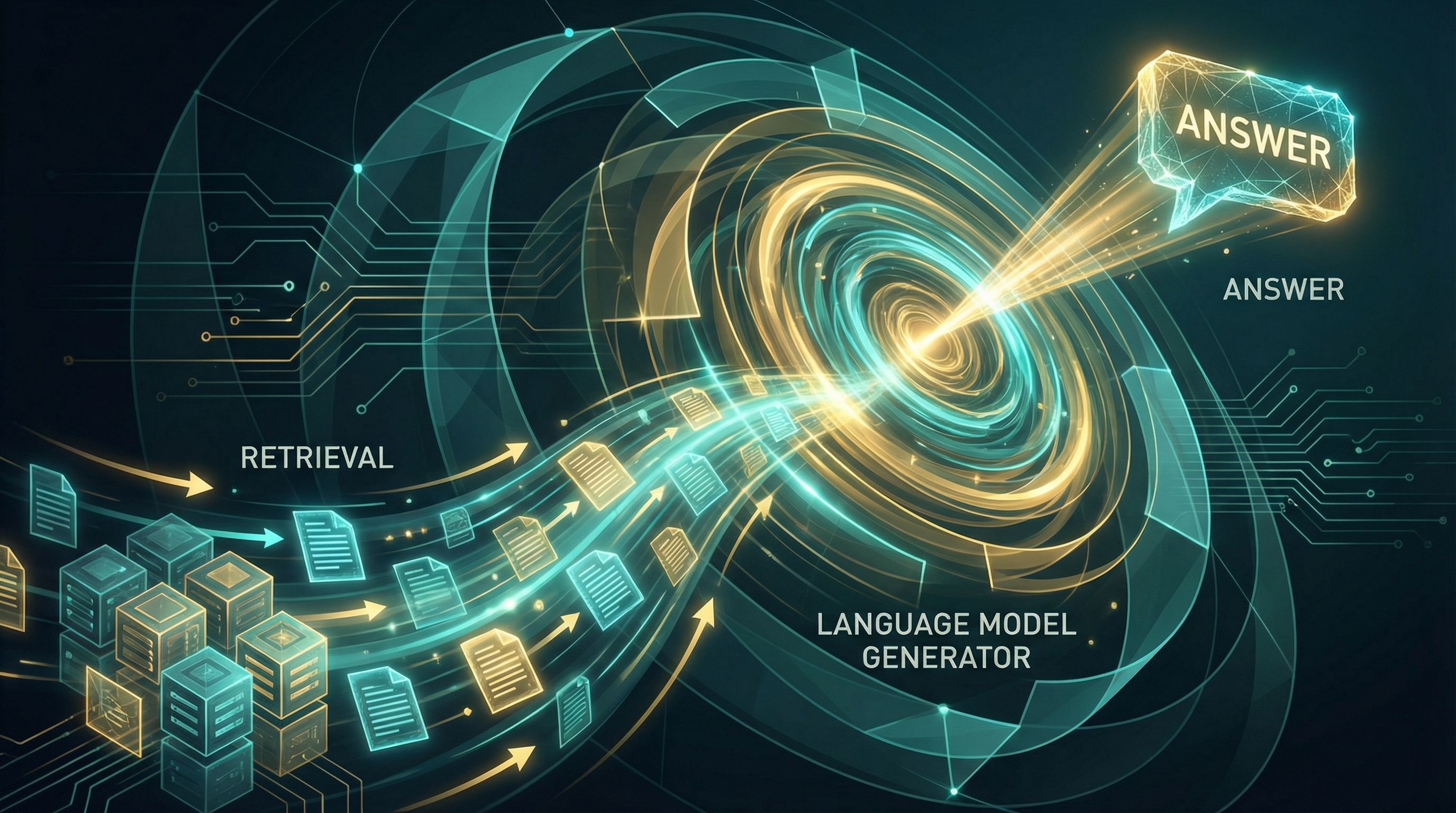

Language models hallucinate: they generate plausible-sounding text without checking it against any source. Retrieval-Augmented Generation (RAG) mitigates this by retrieving relevant documents and conditioning generation on them, pairing external knowledge with a strong generator.

Why Sutskever Included This

Pure parametric models encode knowledge in weights, fixed at training time and prone to hallucination. RAG augments generation with retrieval, enabling dynamic access to external knowledge. This architecture powers modern question-answering and knowledge-intensive applications.

The Architecture

RAG combines two components:

Retriever: Dense Passage Retrieval (DPR) finds relevant documents from a corpus based on the input query.

Generator: A seq2seq model (like BART) produces answers conditioned on the query plus retrieved documents.
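The retriever half can be sketched in a few lines. This is a minimal illustration, assuming queries and documents have already been embedded as vectors: the 3-dimensional embeddings, the `retrieve` helper, and the softmax over only the top-k scores are all assumptions made for this example, not DPR's actual encoders or index.

```python
import math

def dot(u, v):
    """Inner product, the relevance score used in dense retrieval."""
    return sum(a * b for a, b in zip(u, v))

def softmax(scores):
    """Turn raw scores into a probability distribution."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def retrieve(query_vec, doc_vecs, k):
    """Return (doc_index, probability) pairs for the k best-scoring documents."""
    scored = sorted(
        ((dot(query_vec, d), i) for i, d in enumerate(doc_vecs)),
        reverse=True,
    )[:k]
    # Assumption: renormalize over the top-k only, as a stand-in for P(z|x).
    probs = softmax([s for s, _ in scored])
    return [(i, p) for (_, i), p in zip(scored, probs)]

# Hypothetical embeddings; a real system would get these from trained encoders.
doc_vecs = [
    [0.9, 0.1, 0.0],
    [0.1, 0.8, 0.1],
    [0.7, 0.2, 0.1],
    [0.0, 0.1, 0.9],
]
query_vec = [1.0, 0.0, 0.0]

top = retrieve(query_vec, doc_vecs, k=2)
for idx, prob in top:
    print(f"doc {idx}: P(z|x) ~ {prob:.3f}")
```

The generator would then receive the query together with each selected document. At corpus scale, the exhaustive scoring loop here would normally be replaced by an approximate nearest-neighbor index.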

P(y|x) = Σ_z P(z|x) · P(y|x,z)

The probability of answer y given query x marginalizes over retrieved documents z. In practice the sum runs over the top-k retrieved documents rather than the whole corpus. Each document contributes to the final answer weighted by its relevance P(z|x).
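A small worked example may make the marginalization concrete. The numbers below are invented for illustration, and `marginalize` is a hypothetical helper: three retrieved documents each give a distribution over two candidate answers, and the retrieval probabilities weight them.

```python
def marginalize(retrieval_probs, answer_probs):
    """Compute P(y|x) = sum_z P(z|x) * P(y|x,z) for every candidate answer y."""
    answers = set()
    for dist in answer_probs:
        answers.update(dist)
    return {
        y: sum(pz * dist.get(y, 0.0) for pz, dist in zip(retrieval_probs, answer_probs))
        for y in answers
    }

# P(z|x) for three retrieved documents (made-up values that sum to 1).
retrieval_probs = [0.6, 0.3, 0.1]

# P(y|x,z): what the generator would say given each document (made-up values).
answer_probs = [
    {"Paris": 0.9, "Lyon": 0.1},
    {"Paris": 0.5, "Lyon": 0.5},
    {"Paris": 0.2, "Lyon": 0.8},
]

p_y = marginalize(retrieval_probs, answer_probs)
for answer, prob in sorted(p_y.items(), key=lambda kv: -kv[1]):
    print(f"P({answer}|x) = {prob:.2f}")
```

The highest-weighted document dominates, but the other documents still shift the final distribution in proportion to their retrieval probability.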

Two Variants

RAG-Sequence: Each retrieved document is used on its own to generate a complete answer, and the final output combines these candidates weighted by retrieval scores. This variant tends to suit single-fact questions.

RAG-Token: Different documents can influence different output tokens, because the marginalization over documents happens at every token. The generator can synthesize information from multiple sources within a single response, which tends to suit complex questions requiring multiple facts.
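The difference between the two variants is where the sum over documents sits relative to the product over tokens. The sketch below is a toy comparison under a simplifying assumption: each token's probability depends only on the document, not on previously generated tokens. All numbers and the function names `rag_sequence` and `rag_token` are illustrative.

```python
import math

def rag_sequence(p_z, token_probs):
    """Sum over documents of P(z|x) times the product of token probabilities."""
    return sum(pz * math.prod(tp) for pz, tp in zip(p_z, token_probs))

def rag_token(p_z, token_probs):
    """Product over tokens of the document-weighted token probability."""
    n_tokens = len(token_probs[0])
    return math.prod(
        sum(pz * tp[i] for pz, tp in zip(p_z, token_probs))
        for i in range(n_tokens)
    )

# Two equally relevant documents and a two-token answer.
p_z = [0.5, 0.5]
# Document A supports only the first token, document B only the second.
token_probs = [
    [0.9, 0.1],  # P(token_i | x, doc A)
    [0.1, 0.9],  # P(token_i | x, doc B)
]

seq_score = rag_sequence(p_z, token_probs)
tok_score = rag_token(p_z, token_probs)
print(f"RAG-Sequence: {seq_score:.2f}")
print(f"RAG-Token:    {tok_score:.2f}")
```

In this toy setup the token-level variant assigns a higher probability to an answer whose parts are supported by different documents, which is the intuition behind its fit for multi-fact questions.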

Advantages

Factual grounding: Retrieved documents provide evidence. The model can generate from the retrieved text rather than relying only on memorized patterns.

Interpretability: Retrieved documents show where the answer came from. Users can verify sources.

Updatability: Knowledge base changes don't require retraining the generator. Update the corpus and its index, and retrieval picks up the new content.

Efficiency: Smaller models can achieve strong performance by offloading knowledge to retrieval.
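The updatability point can be illustrated with a toy corpus. The sketch below uses word overlap as a deliberately crude stand-in for a dense retriever; `overlap_score`, `retrieve_best`, and the example sentences are all hypothetical. Nothing resembling model weights changes between the two calls: only the corpus does.

```python
def overlap_score(query, doc):
    """Count shared lowercase words (a crude stand-in for a learned relevance score)."""
    return len(set(query.lower().split()) & set(doc.lower().split()))

def retrieve_best(query, corpus):
    """Return the single document with the highest overlap score."""
    return max(corpus, key=lambda doc: overlap_score(query, doc))

corpus = [
    "the library released version 1 in 2020",
    "the project is written in python",
]
query = "what is the latest version of the library"

before = retrieve_best(query, corpus)

# Knowledge update: append a document, no retraining involved.
corpus.append("the latest version of the library is version 2")

after = retrieve_best(query, corpus)
print("before:", before)
print("after: ", after)
```

With a dense retriever the new document would also need to be embedded and added to the index, but the generator itself stays untouched.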

Limitations

Retrieval errors propagate to generation. If the wrong documents are retrieved, the answer is likely to be wrong as well. Retrieval also adds latency compared to pure generation.

The "Lost in the Middle" problem (Paper #30) affects RAG: models struggle to use information from middle positions of retrieved context.

More in This Series

Part of a series on Ilya Sutskever's recommended 30 papers.